
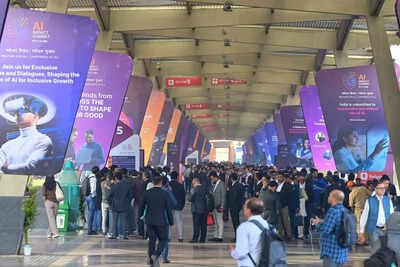

When India analyses satellite images, sweeps its borders and coastlines, draws up military strategy, maps national assets, monitors its power grid, detects cyberattacks and shores up UPI payment gateways (massive tech operations that run on servers to keep the wheels of daily life in motion), artificial intelligence (AI) is no longer optional.

With technology and geopolitics increasingly intertwined, models that interpret sensitive information, flag anomalies, manage data and assist decision-making are increasingly controlling the synapses of the strategic nervous system.

As the second-largest AI market in the world, India is the toast of the global tech community, as the turnout at the ongoing AI Impact Summit in Delhi shows.

But while that is a big economic opportunity for an aspiring superpower, govt faces a foundational question in bringing AI into its administrative and security framework: how much can India rely on foreign models? At the core of this question is data security.

Whether it’s governance or national security, govts use AI for deep analytics, for which vast amounts of data — public, sensitive and, for military use, strategic — need to be fed into AI models.

Much of the foundational AI infrastructure used globally, such as advanced chips, large language models and hyperscale cloud systems, is concentrated in the US and China, giving them disproportionate influence in the AI universe.

In India, therefore, there’s a growing realisation that the country needs sovereign AI for national security. Unless it builds such capability, India’s use of AI for security, governance and other strategic purposes will remain cautious and suboptimal.

India also needs AI that’s built, hosted and computed here to preserve the “strategic autonomy” of its foreign policy.

In a recent report, ‘India’s AI Gambit’, Mumbai-based think tank Strategic Foresight Group (SFG) calls on govt to decide urgently whether India can regulate or control the import of AI models that may pose a risk to national security. The report also suggests exploring a mandatory national security assessment framework for models deployed from abroad.

What are the threats?

Sundeep Waslekar of SFG says AI is creating more asymmetric threats to national security. “National Security Council should check every foreign model that is deployed in India,” he says.

Military veteran Lt Gen Satish Dua (retd), a counter-terrorism specialist and the man behind the 2016 Uri surgical strike, tells TOI that AI is useful for threat assessment and strategic decision-making. AI tools can analyse data from multiple sensors for weather-based artillery planning and for predicting adversary behaviour.

“Doing all this manually takes a lot of time, so yes, AI tools can be useful there,” he says. But, he adds, caution is needed in deciding how much to trust foreign models with sensitive data.

In a paper, ‘Beyond the Kinetic: Deconstructing warfare in the socio-technical cognitive battlespace (STCB)’, released in Jan, Lt Colonel Pavithran Rajan (retd) and former head of Northern Command Lt Gen D S Hooda (retd) argue that modern battlefields are increasingly shaped by technology and information manipulation.

This became very apparent during Operation Sindoor last year and the subsequent military confrontation with Pakistan, after which India decided to raise its first integrated battle group, Rudra, designed for the needs of modern warfare.

“The application of AI is revolutionising STCB. This includes the weaponisation of AI-generated content (deepfakes, synthetic text) for disinformation campaigns, the use of ML for micro-targeting populations with tailored messaging, and the deployment of AI in autonomous weapons systems,” the paper states.

Guarded use of AI

Making the case for sovereign AI models, Lt Col Rajan says, “AI will increasingly determine how states govern, secure themselves and project power. In such a world, sovereignty is no longer guaranteed by territory alone. It resides in control over data, algorithms and the cognitive infrastructures that bind them together. Those who treat AI as merely another sector will be governed by it. Those who recognise it as an instrument of power may still shape its terms.”

Former diplomat Pankaj Saran, convener of the Centre for Research on Strategic and Security Issues (NatStrat), says AI’s own vulnerabilities are also a risk factor, and that sovereign control over AI models lets a country counter these threats more effectively. “Security vulnerabilities are faced by AI systems themselves. AI can be used by attackers to automate and scale attacks, making defence more challenging,” he says.

He points to initiatives such as NATGRID’s AI-enabled Organised Crime Network Database, which aggregates law enforcement data for analysis, and says such systems require strict governance on security, access control and auditing.

As a result, AI adoption across ministries remains cautious. A source in the ministry of power says AI tools can help forecast generation capacity, particularly in renewable energy, but their vulnerabilities need to be studied.

“Most of our systems are old and will need to be replaced if we want to use AI. But that is not the only issue. If we do it without addressing the weak points, just one cyberattack can switch off the entire grid,” the source adds.

Big ministries like defence, finance, education and health are similarly wary about deploying AI in core systems without robust safeguards.

Who’s building Indian AI?

Ministry of electronics and information technology (MeitY) officials say a key deliverable under the IndiaAI Mission is to build and support Indian foundational models. Additional secretary Abhishek Singh acknowledges that dependence on foreign models carries strategic risk. “Not having our own model means the data goes to another geography and we are always dependent on it. It can also be used as a strategic tool to deny access,” he tells TOI. Sovereign AI models will also be showcased at the AI Impact Summit.

Govt has shortlisted 12 startups to build sovereign AI models, including Sarvam AI, BharatGen, Gyan AI, Shodh AI, Intellihealth, Genloop, Socket, Fractal and Tech Mahindra. Among those seen as frontrunners are Bengaluru-based Sarvam AI, the IIT-Bombay-incubated BharatGen and Mumbai-based Fractal. Sarvam AI has released models such as Sarvam Vision and Bulbul V3, which the company claims outperform global competitors on certain benchmarks and which are trained on datasets in 22 Indian languages.

Fractal has developed a healthcare model, Vaidya. BharatGen is building Bharat Sagar, a model aimed at public policy use cases.

Singh describes five layers of the AI ecosystem: chips, data centres, servers, energy, and models and applications. “To be self-reliant, we will have to manage all this on our own,” he says.

India has been aggressively positioning itself as a data centre hub, hoping this will give it logistical influence over the AI infrastructure that supports different operations globally.

Founders of some AI startups building sovereign models, speaking on condition of anonymity, say infrastructure support, including access to GPUs (graphics processing units), and funding have been slow to arrive. A Mumbai-based founder says his company has received only a fraction of the funds sanctioned under the AI mission and has had to rely on its own resources.

Open source, limited solution

MeitY released the India AI Governance Guidelines in Nov 2025, outlining recommendations on risk identification, inter-agency collaboration and national security safeguards.

A MeitY source says that in govt systems, open-source models are downloaded and hosted on domestic servers rather than accessed via foreign-hosted APIs. “For example, in govt, when we are using something in NIC (National Informatics Centre), we don’t use any of the models that are hosted outside. These are open-source models that we download and then host on our machines, on our local infrastructure. Then we offer the services here. That way, the data resides in India,” the source says.

Open-source use has its limitations, however. While it protects data, control over the model itself remains with the provider of the LLM, so the user must depend on the provider for upgrades, customisations and so on. In areas of national importance, this means a critical time lag between a problem emerging and a solution arriving.

Prakash Kumar, former IAS officer and currently CEO of Wadhwani Centre for Digital Govt Transformation, says big companies in India that use AI models have pacts with companies like OpenAI and Anthropic to make sure their data does not go anywhere else.

“As far as I know, govt does not have any such pact with AI companies so far,” he says.

In the meantime, Saran says, India can draw lessons from the European Union’s data protection regime. “India’s Digital Personal Data Protection Act is a step forward, but it needs clear guidelines for consent, minimisation and data retention limits,” he says, stressing the need for cyber awareness and resilience across institutions and citizens.
